QEMU Virtual Machine PCIe Device Passthrough Using vfio-pci

2017.09.16 | Yuki Rea

When running a QEMU virtual machine (VM) on a Linux-based operating system, we have the ability to dedicate PCIe devices to VMs using the vfio-pci kernel module so that the VM can control them directly. There are many reasons you may want to do this: a USB controller, so that anything plugged into that controller's USB ports is connected to the VM; a SATA controller for booting or controlling drives directly; a network card; a sound card; or even a graphics card for high performance graphics acceleration inside the VM. This guide will show how this can be done without blacklisting the kernel module driver of the device you want to pass through. Blacklisting a kernel module prevents every device in the system from using it, which is a problem if you have multiple devices that use the same module. With this method you can have multiple devices in the same system which use the same kernel module. You could even have two identical devices (like two of the same GPU), keep one for the host, and pass one into the VM.

The system I will be using for this tutorial is a Lenovo Thinkpad T420s running Debian 9.1 and Linux kernel 4.12. The same steps should apply to other machines, including ones using different hardware and Linux distributions, as long as the hardware meets the requirements described below. I currently use my desktop with an AMD FX 8350 CPU and GIGABYTE 990FXA-UD3 motherboard (also running Debian 9.1 and kernel 4.12) to run a VM with a GTX 1070 and USB controller passed through for mining Ethereum, occasionally playing games, and running the Adobe CC software suite. I have used this same method on Arch Linux, openSUSE, and Ubuntu, all with success, although I do not recommend using any Linux kernel older than 4.4.

Ensuring Your Hardware is Capable of Intel VT-d or AMD-Vi

The first step of this process is to make sure that your hardware is capable of this type of virtualization. You need a motherboard, CPU, and BIOS that have an IOMMU controller and support Intel VT-x and Intel VT-d, or AMD-V and AMD-Vi. Some motherboards use different terminology for these, for example my 990FXA-UD3 lists AMD-v as

Preparing Your System

Next you will have to pass the correct kernel parameters to the Linux kernel to enable IOMMU.

Open the following text file in your text editor of choice:

/etc/default/grub

You should see a line with the following text:

/etc/default/grub

GRUB_CMDLINE_LINUX_DEFAULT="quiet"

Append one of the following options inside the quotation marks, separated from the existing options by a space: intel_iommu=on or amd_iommu=on, depending on whether you have an Intel or AMD CPU. AMD users may also want to try iommu=pt iommu=1 instead of

This line should now look something like this:

/etc/default/grub

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on"

Save the file and then run the following command:

update-grub

Once that has completed, reboot the system.
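After the reboot, it can be worth confirming that the option actually reached the kernel. The sketch below is one way to do that, assuming the running kernel's command line is readable from /proc/cmdline. The function name iommu_param_present is made up for this example, and it only looks for the two options named above, so it will not recognise alternatives such as iommu=pt.

```bash
#!/bin/bash

# Succeeds if the given kernel command line contains intel_iommu=on or
# amd_iommu=on. With no argument, the running kernel's command line is
# read from /proc/cmdline. (Hypothetical helper, not part of any tool.)
iommu_param_present() {
    local cmdline="${1:-$(cat /proc/cmdline)}"
    local word
    # split the command line on whitespace and inspect each option
    for word in $cmdline; do
        case "$word" in
            intel_iommu=on|amd_iommu=on) return 0 ;;
        esac
    done
    return 1
}

# Example use:
#   iommu_param_present && echo "IOMMU option found on kernel command line"
```

If the function reports that the option is missing, check that update-grub ran without errors and that you edited the GRUB_CMDLINE_LINUX_DEFAULT line.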

It is good to note that there are also more advanced kernel parameters pertaining to IOMMU that may be useful to you, but for now the ones above should be enough.

See The kernel’s command-line parameters for a list of more kernel parameters.

After enabling IOMMU you can check the IOMMU device map to ensure that there is proper separation between the devices you wish to pass into your VM and the ones you wish to remain attached to the host. There are a few ways to do this. The simplest is to run the following command:

ls /sys/kernel/iommu_groups/*/devices

The output should look something like this:

...
/sys/kernel/iommu_groups/11/devices:
0000:00:1f.0 0000:00:1f.2 0000:00:1f.3
...
/sys/kernel/iommu_groups/14/devices:
0000:0d:00.0
...

You can see that group 11 has 3 devices in it, which means they are not isolated from each other. If you wanted to pass one of these devices through to your VM, you could not just pick one. You would have to pass through every device inside the IOMMU group. Group 14, on the other hand, contains only a single device, so that device can be passed through on its own.
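If you would rather see one line per device with its group number, a small loop over the same sysfs directory can do it. This is a minimal sketch and not part of the original steps: the function name list_iommu_groups is hypothetical, and the optional argument exists only so the function can be pointed at a different directory.

```bash
#!/bin/bash

# Print "group <number>: <PCI address>" for every device in every IOMMU
# group. The base directory defaults to /sys/kernel/iommu_groups.
# (Hypothetical helper written for this example.)
list_iommu_groups() {
    local base="${1:-/sys/kernel/iommu_groups}"
    local dev group
    for dev in "$base"/*/devices/*; do
        # skip the unexpanded pattern when no groups exist
        [ -e "$dev" ] || continue
        # strip the base directory, then keep only the group number
        group="${dev#"$base"/}"
        group="${group%%/*}"
        printf 'group %s: %s\n' "$group" "${dev##*/}"
    done
}

# Example use:
#   list_iommu_groups
```

Any group that prints more than one line contains devices that have to be passed through together. Note that the groups are listed in the shell's glob order, which is not numeric.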

To see what devices these PCIe IDs correspond to, you can run another command:

lspci

The output should look something like this:

...
00:1f.0 ISA bridge: Intel Corporation QM67 Express Chipset Family LPC Controller (rev 04)
00:1f.2 SATA controller: Intel Corporation 6 Series/C200 Series Chipset Family 6 port SATA AHCI Controller (rev 04)
00:1f.3 SMBus: Intel Corporation 6 Series/C200 Series Chipset Family SMBus Controller (rev 04)
...
0d:00.0 USB controller: NEC Corporation uPD720200 USB 3.0 Host Controller (rev 04)

Thankfully, the following three devices, which are all in IOMMU group 11, do not need to be used by the guest:

...
00:1f.0 ISA bridge: Intel Corporation QM67 Express Chipset Family LPC Controller (rev 04)
00:1f.2 SATA controller: Intel Corporation 6 Series/C200 Series Chipset Family 6 port SATA AHCI Controller (rev 04)
00:1f.3 SMBus: Intel Corporation 6 Series/C200 Series Chipset Family SMBus Controller (rev 04)
...

You need to run the lspci command again, but this time you will need more information, specifically the device IDs of the devices you wish to pass through. Run the following commands to create a text file and write the output of the lspci command to it, as you will need it later. Replace /home/user/lspci-output.txt with the path to the file where you wish to save the output.

touch /home/user/lspci-output.txt
lspci -vnn > /home/user/lspci-output.txt

Then open the file with your text editor of choice or run the following command to print it to your terminal, again replacing /home/user/lspci-output.txt with the path to the file you saved the output in.

cat /home/user/lspci-output.txt

The output should look like the example below. Note the device IDs of the devices you wish to pass through. Each one is made up of two four-character strings separated by a colon. I have highlighted it for you in this example (1033:0194):

/home/user/lspci-output.txt

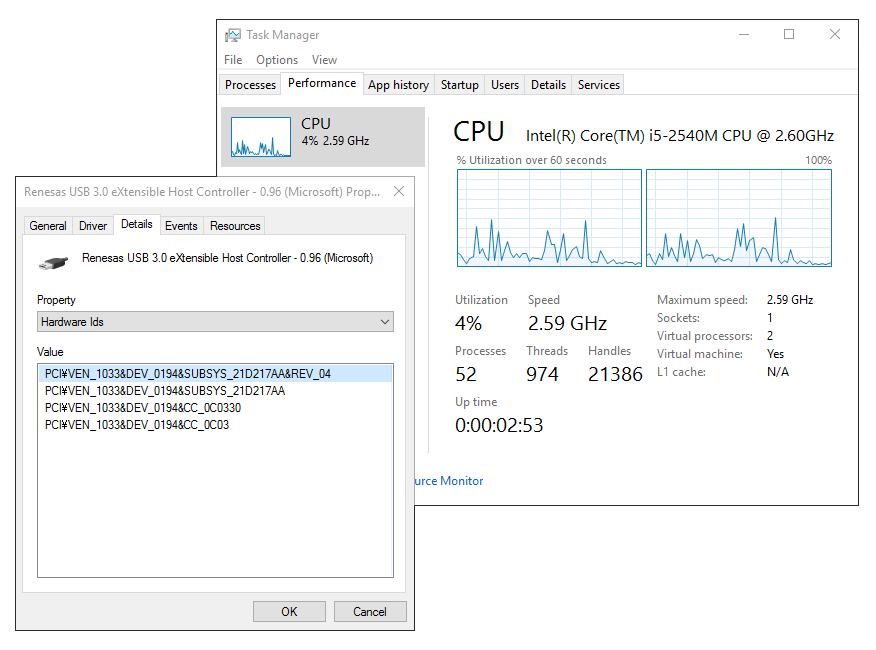

...
0d:00.0 0c03: 1033:0194 (rev 04) (prog-if 30 [XHCI])
	Subsystem: 17aa:21d2
	Flags: bus master, fast devsel, latency 0, IRQ 16
	Memory at f0c00000 (64-bit, non-prefetchable) [size=8K]
	Capabilities: <access denied>
	Kernel driver in use: xhci_hcd
	Kernel modules: xhci_pci
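The same two values can also be read straight from sysfs, which is handy if you want to script this step. The sketch below assumes the standard vendor and device files under /sys/bus/pci/devices. The function name pci_device_id and its optional second argument are illustrative only.

```bash
#!/bin/bash

# Print the vendor:device ID pair for a PCI address such as 0000:0d:00.0.
# The second argument overrides the sysfs device directory and defaults
# to /sys/bus/pci/devices. (Hypothetical helper written for this example.)
pci_device_id() {
    local addr="$1"
    local root="${2:-/sys/bus/pci/devices}"
    local vendor device
    vendor="$(cat "$root/$addr/vendor")" || return 1
    device="$(cat "$root/$addr/device")" || return 1
    # sysfs stores the values with a 0x prefix, which is stripped here
    printf '%s:%s\n' "${vendor#0x}" "${device#0x}"
}

# Example use:
#   pci_device_id 0000:0d:00.0
```

For the USB controller used in this guide, the result should match the ID shown in the lspci output above.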

Create and open the following file in your text editor of choice:

/etc/modprobe.d/vfio.conf

Enter the following text adding the device IDs of the devices you wish to pass through to your VM separated by commas.

/etc/modprobe.d/vfio.conf

options vfio-pci ids=1033:0194

Some devices (such as many graphics cards) need to be bound to the vfio-pci driver early in the boot process. You could do this by just blacklisting the driver for that device, but that is a poor and unnecessary solution as it prevents other devices from using the same driver. A better way is to create a module alias for the device you wish to pass through. To do this you will need to run the following command in order to obtain the modalias ID for your device. Replace 0000:0d:00.0 with the PCI ID of your device.

cat /sys/bus/pci/devices/0000:0d:00.0/modalias

The output should look like this:

pci:v00001033d00000194sv000017AAsd000021D2bc0Csc03i30

Add the output of the previous command to /etc/modprobe.d/vfio.conf while adding alias at the beginning of the line and vfio-pci at the end.

/etc/modprobe.d/vfio.conf

alias pci:v00001033d00000194sv000017AAsd000021D2bc0Csc03i30 vfio-pci
options vfio-pci ids=1033:0194
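If you have several devices to pass through, typing each alias line by hand gets tedious. As a rough sketch, the line can be built from the modalias file directly. The function name vfio_alias_line is hypothetical, and the second argument is only there so a different sysfs directory can be supplied.

```bash
#!/bin/bash

# Print an "alias <modalias> vfio-pci" line for the given PCI address,
# ready to be added to /etc/modprobe.d/vfio.conf.
# (Hypothetical helper written for this example.)
vfio_alias_line() {
    local addr="$1"
    local root="${2:-/sys/bus/pci/devices}"
    local modalias
    modalias="$(cat "$root/$addr/modalias")" || return 1
    printf 'alias %s vfio-pci\n' "$modalias"
}

# Example use (as root), appending to the file created earlier:
#   vfio_alias_line 0000:0d:00.0 >> /etc/modprobe.d/vfio.conf
```

Check the file afterwards so that you do not end up with the same alias line twice.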

After making changes to the /etc/modprobe.d/vfio.conf file, you will need to update your initramfs for the changes to take effect. Do this by running the following command, which will create a new initramfs for each Linux kernel on the system:

update-initramfs -u -k all

After the command has finished, reboot your system before continuing to the next step.

Before you continue to writing the script that will run your VM, you should ensure that your devices have been configured correctly. Do this by attempting to unbind your devices from the kernel module they were using and bind them to the vfio-pci kernel module. To do this, run the following three commands. Replace 0000:0d:00.0 with the PCI ID of your device and xhci_hcd with the kernel driver in use for your device, as shown in the file you created earlier with the output of the lspci -vnn command.

modprobe vfio-pci
echo '0000:0d:00.0' > /sys/bus/pci/drivers/xhci_hcd/unbind
echo '0000:0d:00.0' > /sys/bus/pci/drivers/vfio-pci/bind

After running those three commands, run the lspci -vnn command again to see if the kernel driver in use has changed to vfio-pci.

lspci -vnn

The kernel driver in use should now read vfio-pci. If it does, you can continue to the next step, writing the script. If it does not, one of the previous steps was forgotten or done incorrectly, so go back now and check everything.

...
0d:00.0 0c03: 1033:0194 (rev 04) (prog-if 30 [XHCI])
	Subsystem: 17aa:21d2
	Flags: bus master, fast devsel, latency 0, IRQ 16
	Memory at f0c00000 (64-bit, non-prefetchable) [size=8K]
	Capabilities: <access denied>
	Kernel driver in use: vfio-pci
	Kernel modules: xhci_pci
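The unbind, bind, and check steps can also be wrapped in functions so that the current driver does not have to be typed by hand. The following is only a sketch under the assumption that each bound device has a driver symlink in sysfs and that every driver directory has unbind and bind files, as in the commands above. The names pci_current_driver and rebind_pci_device are made up for this example.

```bash
#!/bin/bash

# Print the name of the driver a PCI device is bound to, or "none".
# (Hypothetical helper written for this example.)
pci_current_driver() {
    local addr="$1"
    local root="${2:-/sys/bus/pci/devices}"
    local link="$root/$addr/driver"
    if [ -L "$link" ]; then
        # the symlink target ends in the driver's directory name
        basename "$(readlink "$link")"
    else
        echo "none"
    fi
}

# Unbind a device from whatever driver currently holds it and bind it
# to the target driver, e.g. vfio-pci. Needs root on a real system.
# (Hypothetical helper written for this example.)
rebind_pci_device() {
    local addr="$1" target="$2"
    local bus="${3:-/sys/bus/pci}"
    local current
    current="$(pci_current_driver "$addr" "$bus/devices")"
    if [ "$current" = "$target" ]; then
        return 0    # already bound to the requested driver
    fi
    if [ "$current" != "none" ]; then
        echo "$addr" > "$bus/drivers/$current/unbind" || return 1
    fi
    echo "$addr" > "$bus/drivers/$target/bind"
}

# Example use (as root):
#   modprobe vfio-pci
#   rebind_pci_device 0000:0d:00.0 vfio-pci
#   pci_current_driver 0000:0d:00.0
```

After a successful rebind, pci_current_driver should report the same driver name that lspci -vnn shows as the kernel driver in use.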

Writing The Script

Now you are ready to start creating the script to start the VM. Create a file somewhere on the system that you wish to use as the start script for your VM and then run the following command to make it executable. Replace /home/user/startvm.sh with the path to your start script.

chmod +x /home/user/startvm.sh

Below is an example script that I have made. Copy it and paste it into your VM start script. You will need to make modifications to this script in order for it to work on your particular configuration. Start by changing and/or adding the PCI and device IDs to match the devices you wish to attach to your VM. You may also need to change things like the networking configuration, VM display configuration, and boot drive paths. Read through the comments I have made in the script, as they explain what each part of the script does.

/home/user/startvm.sh

#!/bin/bash

#-------------------------------------------------------------------------

# load required kernel modules and get required devices ready for the VM
function initvm {

    # load vfio-pci kernel module
    modprobe vfio-pci

    # unbind 0000:0d:00.0 from xhci_hcd kernel module
    echo '0000:0d:00.0' > /sys/bus/pci/drivers/xhci_hcd/unbind

    # bind 0000:0d:00.0 to vfio-pci kernel module
    echo '0000:0d:00.0' > /sys/bus/pci/drivers/vfio-pci/bind

}

#-------------------------------------------------------------------------

# run the qemu VM
function runvm {

    qemu-system-x86_64 \
        -enable-kvm \
        -cpu host \
        -smp sockets=1,cores=2,threads=1 \
        -m 4G \
        -rtc base=localtime,clock=host \
        -device vfio-pci,host=0d:00.0 \
        -drive file=/dev/sda,format=raw \
        -net nic,model=e1000 \
        -net user \
        -usbdevice tablet \
        -vga qxl

    # Use the following options for PCIe GPU pass through
    # -device vfio-pci,host=00:00.0,multifunction=on,x-vga=on \
    # -display none \
    # -vga none \

    # Use the following options for networking using a TAP interface
    # -net tap,ifname=tap0,script=no,downscript=no \

    # The following will use the host's first physical drive as boot device
    # -drive file=/dev/sda,format=raw \

    # Use the following to improve Windows guest performance
    # -cpu host,hv_relaxed,hv_vapic,hv_spinlocks=0x1fff \

    # For VMs with an Nvidia GPU attached, you must add the following
    # options to bypass the Nvidia driver's virtualization check.
    # (the CPU model must come first, followed by the extra properties)
    # -cpu host,kvm=off,hv_vendor_id=null \

    # For more information about QEMU and its configuration options, please
    # visit the following web address: https://www.qemu.org/documentation/

}

#-------------------------------------------------------------------------

# make devices usable again to the host
function unloadvm {

    # unbind 0000:0d:00.0 from vfio-pci kernel module
    echo '0000:0d:00.0' > /sys/bus/pci/drivers/vfio-pci/unbind

    # bind 0000:0d:00.0 to xhci_hcd kernel module
    echo '0000:0d:00.0' > /sys/bus/pci/drivers/xhci_hcd/bind

}

#-------------------------------------------------------------------------

# execution order

initvm
runvm
unloadvm
exit 0
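One thing the example script does not do is react to failures: it starts QEMU even if binding the device failed, and it always exits with status 0. If you want stricter behaviour, the execution order can be wrapped in a small function like the sketch below. This is my suggestion rather than part of the script above, and the name run_vm_session is hypothetical. It takes the names of three functions (such as initvm, runvm, and unloadvm) as arguments.

```bash
#!/bin/bash

# Run an init step, a VM step, and a cleanup step in order.
# - If the init step fails, the VM is not started and 1 is returned.
#   (No cleanup is attempted in that case, so a partly bound device
#   may need to be handled by hand.)
# - The cleanup step always runs once the VM step has been attempted.
# - The VM step's exit status is passed back to the caller.
# (Hypothetical helper written for this example.)
run_vm_session() {
    local init="$1" run="$2" cleanup="$3"
    local status=0
    "$init" || return 1
    "$run" || status=$?
    "$cleanup"
    return "$status"
}

# Example use, replacing the "execution order" section of the script:
#   run_vm_session initvm runvm unloadvm
#   exit $?
```

With this in place, a wrapper script or service that starts the VM can tell from the exit status whether QEMU ended cleanly.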

Please refer to the following link for the QEMU documentation. It will be very useful when writing your VM script.

QEMU Documentation

You should now be ready to try running your script. Don't be frightened by any errors, they are your friend. Pay attention to what they say, as they usually tell you exactly what is broken or where to look to find out. A common mistake when spreading QEMU arguments across many lines in a script is leaving a space or other character after one of the \ characters at the end of a line. The \ then no longer escapes the line break, so the command ends on that line and QEMU never receives the arguments on the following lines. I would love to hear about your success, or even your failures. Please feel free to share them with me by emailing or tweeting me.
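Trailing whitespace after a \ is hard to spot by eye, so it can help to let grep look for it. The sketch below is a small helper for that, assuming a grep that supports -E and POSIX character classes. The function name find_bad_continuations is made up for this example, and it only catches whitespace after the \, not other stray characters.

```bash
#!/bin/bash

# Print every line (with its line number) of the given file where a
# backslash is followed by whitespace at the end of the line.
# Returns non-zero when no such line is found.
# (Hypothetical helper written for this example.)
find_bad_continuations() {
    grep -nE '\\[[:space:]]+$' "$1"
}

# Example use:
#   find_bad_continuations /home/user/startvm.sh
```

If the command prints nothing, none of the line continuations in the script are followed by stray whitespace.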